Is economics getting better? Yes. It is.

I attended two great conferences last week. The first was an econ conference I co-organized with my friends and colleagues Dr Dejan Kovac and Dr Boris Podobnik, which featured three world-class economists from Princeton and MIT: professors Josh Angrist, Alan Krueger, and Henry Farber. The second was the annual American Political Science Association conference in San Francisco. I came away with great impressions from both, but instead of recounting my experiences I will devote this post to one thing in particular that caught my attention over the past week: a common denominator, so to speak. Listening to participants present their excellent research in a wide range of fields, from economics to network theory (at the first conference) and from political economy to international relations (at the second), I noticed an exciting trend: the increasing use of scientific methods in the social sciences. Methods like randomized control trials and natural experiments are slowly becoming the standard to emulate. I feel we are at the beginning of bringing the science into social science.

The emergence of the scientific method in the social sciences is not new. I've been aware of it for quite some time now (ever since my Master's at LSE, to be exact). However, I am happy to see that many social scientists, especially among the younger generation, now realize the importance of making causal inferences in their research. It is becoming the standard. The new normal. A paper can seldom get published in a top journal without relying on some kind of randomization in its research design. This is not to say there aren't problems. Many young researchers still tend to overestimate the actual randomization in their research design, but the mere fact that they are thinking in this direction is a breakthrough. Economics has entered its own causal inference revolution.

It will take time before the social sciences fully embrace this revolution. We have to accept that some areas of economic research will never be able to make causal claims. For example, a lot of research in macroeconomics unfortunately suffers from this problem, even though there is progress there as well. Also, the social sciences will never be as precise as physics or as useful as engineering. But in my opinion these are the wrong targets. Our goal should simply be to replicate the methods used in psychology or, even better, medicine. I feel that the economics profession today is at the stage where medicine was about a hundred years ago, or psychology some fifty years ago. You still have a bunch of quacks using leeches and electric shocks, but more and more people are accepting the new causal inference standard in the social sciences.

The experimental ideal in social sciences

How does one make causal inferences in economics? Imagine that we have to evaluate whether or not a given policy works. In other words, we want to see whether action A causes outcome B. I've written about mistaking correlation for causality before. The danger lies in a classic cognitive illusion: we see action A preceding outcome B and immediately conclude that action A caused outcome B (when explaining this to students I use the classic examples of Internet Explorer usage and US murder rates, average temperatures and pirates, vaccines and autism, money and sports performance, or wine consumption and student performance). However, any number of unobserved factors could have caused both action A and outcome B. In empirical terms, when we run a simple OLS regression of B on A we are only estimating a correlation, and cannot say anything about the causal effect of A on B.
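
To see why, here is a minimal simulation sketch in Python. The data-generating process is entirely hypothetical: an unobserved factor `u` drives both action A and outcome B, while A has no causal effect on B at all. A simple OLS regression of B on A still returns a clearly non-zero slope.

```python
import random


def ols_slope(x, y):
    """Slope coefficient from a simple OLS regression of y on x (with intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    return cov_xy / var_x


def simulate_confounded(n=10_000, seed=0):
    """Hypothetical data: u affects both A and B; A has NO effect on B."""
    rng = random.Random(seed)
    a, b = [], []
    for _ in range(n):
        u = rng.gauss(0, 1)                # unobserved confounder
        a.append(u + rng.gauss(0, 1))      # action A depends on u
        b.append(2 * u + rng.gauss(0, 1))  # outcome B depends on u only
    return a, b


a, b = simulate_confounded()
print(f"OLS slope of B on A: {ols_slope(a, b):.2f} (true causal effect: 0)")
```

With these made-up parameters the slope settles near 1 (cov(A, B) / var(A) = 2 / 2), purely because of `u`. Adding more observations makes the estimate more precise, not less biased.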

In order to rid ourselves of any unobservable variable that can bias our estimates, we need to introduce randomization. We need to ensure random assignment into treated and control units, where the treated units are the group that gets or does action A, while the control units are a (statistically) identical group that does not. Then we observe the differences in outcomes. If outcomes differ significantly between the treatment and control groups, we can say that action A causes outcome B. Take the example from medicine. You give a drug (i.e. the treatment) to one group, the treatment group, and a placebo to the other, the control group. Then you observe their health outcomes to figure out whether the drug worked. The same can be done in economics.
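
A minimal Python sketch of that logic, with made-up numbers: each person has an unobserved baseline outcome, a coin flip decides who is treated, and the treatment (assumed here to add 1.5 to the outcome) is estimated as the difference in mean outcomes between the two groups, together with a conventional standard error.

```python
import random
import statistics


def run_rct(n=2_000, true_effect=1.5, seed=1):
    """Simulate a hypothetical RCT and return (difference in means, standard error)."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        baseline = rng.gauss(10, 3)       # unobserved individual differences
        if rng.random() < 0.5:            # random assignment by coin flip
            treated.append(baseline + true_effect)
        else:
            control.append(baseline)
    diff = statistics.mean(treated) - statistics.mean(control)
    se = (statistics.variance(treated) / len(treated)
          + statistics.variance(control) / len(control)) ** 0.5
    return diff, se


diff, se = run_rct()
print(f"estimated effect = {diff:.2f} (SE {se:.2f}), t = {diff / se:.1f}")
```

Because assignment is random, the unobserved baselines are balanced across the groups on average, so the simple difference in means recovers the assumed effect up to sampling noise.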

It is very important that the observed units are randomly assigned. Why? Because randomization makes treatment statistically independent of everything else about the participants, observed or not. When we randomly pick who will be in the treatment group and who will be in the control group, we make sure that the two groups are, on average, statistically indistinguishable from one another. The greater the number of participants, the better, because chance imbalances between the groups shrink as the sample grows. Any difference in outcomes between the two similar groups should then be a result of the treatment itself, nothing more and nothing less.
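
The point about sample size can be checked with a quick simulation. This sketch randomly splits people into two equal halves and measures how far apart the halves are on a pre-treatment characteristic (a hypothetical age variable, drawn uniformly between 18 and 80), averaged over 100 random splits.

```python
import random
import statistics


def covariate_gap(n, seed):
    """Randomly split n people into two equal halves; return the absolute
    difference in mean age between the halves."""
    rng = random.Random(seed)
    ages = [rng.uniform(18, 80) for _ in range(n)]
    rng.shuffle(ages)                      # shuffle, then split: complete randomization
    half = n // 2
    return abs(statistics.mean(ages[:half]) - statistics.mean(ages[half:]))


avg_gap = {}
for n in (20, 200, 2_000):
    avg_gap[n] = statistics.mean(covariate_gap(n, seed) for seed in range(100))
    print(f"n = {n:>5}: average age gap between groups = {avg_gap[n]:.2f} years")
```

The average gap shrinks roughly in proportion to 1/sqrt(n), so a tenfold increase in sample size cuts it by a factor of about three.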

Positive trends

Social sciences now possess the tools to do precisely these kinds of experimental tests. On one hand, you can run actual randomized control trials (i.e. field experiments), where you assign a policy (e.g. health insurance coverage) to one group of people and not to another, and then observe how people react to it and how it affects their outcomes (whatever we want to observe: their health, their income, etc.). A similar experiment is being conducted to examine whether a basic income lowers unemployment and inequality. There are plenty of other examples.

In addition to field experiments, we can use natural experiments. You do this when you're not running the experiment yourself but have good data that allows you to exploit some kind of random assignment of participants into treatment and control units (in the next post I'll describe the basic idea behind regression discontinuity design and how it can be used to answer the question of whether grades cause higher salaries). Whatever method you use, you need to justify why the assignment into treatment and control units was random, or at least as-if random (more on that next time).
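
As a rough preview of regression discontinuity, here is a sketch in which everything is hypothetical: students who score at least 70 on an exam get a better grade, salaries rise smoothly with the underlying score, and the better grade adds an assumed 5 units on top. Fitting a separate line on each side of the cutoff and comparing the two at the cutoff isolates the jump. Naively comparing students just above and just below the cutoff does not, because it also picks up the underlying score gradient.

```python
import random
import statistics


def fit_line(x, y):
    """Return (intercept, slope) from a simple OLS fit of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return my - slope * mx, slope


def rdd_estimate(score, outcome, cutoff, bandwidth):
    """Sharp RDD sketch: local linear fit on each side of the cutoff, with
    scores centred at the cutoff so each intercept is the fitted value there."""
    left = [(s - cutoff, y) for s, y in zip(score, outcome)
            if cutoff - bandwidth <= s < cutoff]
    right = [(s - cutoff, y) for s, y in zip(score, outcome)
             if cutoff <= s <= cutoff + bandwidth]
    left_at_cutoff, _ = fit_line(*zip(*left))
    right_at_cutoff, _ = fit_line(*zip(*right))
    return right_at_cutoff - left_at_cutoff


# Hypothetical data: salary = 30 + 0.3 * score + 5 * (score >= 70) + noise
rng = random.Random(2)
score = [rng.uniform(0, 100) for _ in range(20_000)]
salary = [30 + 0.3 * s + 5 * (s >= 70) + rng.gauss(0, 3) for s in score]


def mean_salary(lo, hi):
    """Mean salary of students with lo <= score < hi."""
    return statistics.mean(y for s, y in zip(score, salary) if lo <= s < hi)


naive = mean_salary(70, 80) - mean_salary(60, 70)
rdd = rdd_estimate(score, salary, cutoff=70, bandwidth=10)
print(f"naive comparison: {naive:.2f}, RDD estimate: {rdd:.2f} (assumed jump: 5)")
```

In real applications the bandwidth, the functional form on each side, and checks that people cannot manipulate their score around the cutoff all matter; this sketch ignores them.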

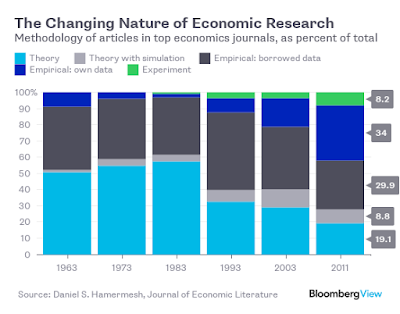

As I said in the intro, this is becoming the new standard. Long gone is the time where you can do theory and when empirical work was limited to kitchen-sink regressions (throw in as many variables as you can). Have a look at the following figure from Bloomberg (data taken from a recent JEL paper by Daniel Hamermesh):

The trend is clear. There has been a rapid decline in papers doing pure theory (from 57% at its 1980s peak to 19% today), a huge increase in empirical papers using their own data (from 2.4% to 34%), and a roughly tenfold increase in papers doing experiments (from 0.8% to 8.2%). This positive trend will only pick up over time.
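
For readers who want the arithmetic behind these shares, here is a tiny Python snippet, using only the figures quoted above, that expresses each change both in percentage points and as a ratio. By either measure, the rise in empirical papers using their own data is larger than the rise in experimental papers, while experiments grew roughly tenfold from a very low base.

```python
def change(then, now):
    """Return (change in percentage points, ratio of new share to old share)."""
    return now - then, now / then


# Shares of papers (%) as quoted above: (earlier value, today)
shares = {
    "pure theory": (57.0, 19.0),
    "empirical, own data": (2.4, 34.0),
    "experiments": (0.8, 8.2),
}

for kind, (then, now) in shares.items():
    points, ratio = change(then, now)
    print(f"{kind:<20} {points:+6.1f} pp   x{ratio:.1f}")
```

Pure theory falls by 38 percentage points (to about a third of its peak share), own-data empirical papers rise by 31.6 points (about 14 times), and experiments rise by 7.4 points (about 10 times).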

Here's another graph, from the Economist, that paints a similar picture. This one breaks down the empirical part in greater detail. There has been a big jump in the use of quasi-experimental methods (or natural experiments), such as regression discontinuity and difference-in-differences, since the late 90s. DSGE models also became more attractive over the same period, leading to a jump in DSGE papers, although this trend has slightly declined over the past decade. Even more encouraging, randomized control trials have picked up since the 2000s, and in the last few years there has even been a jump in big data and machine learning papers in economics. That alone is fascinating enough.
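
Difference-in-differences, one of the quasi-experimental methods mentioned above, is easy to sketch. In this hypothetical example a policy affects one group but not another, both groups share a common time trend (the key parallel-trends assumption, built in here by construction), and the assumed policy effect is 2. Subtracting the control group's before/after change from the treated group's change removes both the fixed gap between the groups and the common trend.

```python
import random
import statistics


def diff_in_diff(y, treated, post):
    """2x2 difference-in-differences from individual outcomes y and 0/1
    indicators for the treated group and the post-policy period."""
    def cell_mean(g, t):
        return statistics.mean(v for v, gi, ti in zip(y, treated, post)
                               if gi == g and ti == t)
    return (cell_mean(1, 1) - cell_mean(1, 0)) - (cell_mean(0, 1) - cell_mean(0, 0))


# Hypothetical data: group gap of 8, common trend of 3, policy effect of 2
rng = random.Random(3)
treated = [i % 2 for i in range(8_000)]
post = [(i // 2) % 2 for i in range(8_000)]
y = [50 + 8 * g + 3 * t + 2 * g * t + rng.gauss(0, 5)
     for g, t in zip(treated, post)]

print(f"DiD estimate: {diff_in_diff(y, treated, post):.2f} (assumed effect: 2)")
```

By contrast, comparing the two groups only after the policy would give about 10 (the group gap of 8 plus the effect), and comparing the treated group before and after would give about 5 (the common trend of 3 plus the effect).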

There are still problems, however. Critics of such approaches worry about the narrowing of the questions economists are starting to ask. Instead of big macro issues such as 'what causes crises', we are focusing on a narrow policy within a subset of the population. There have been debates questioning the external validity of every single randomized control trial, which is a legitimate concern. If a given policy worked on one group of people in one area at one point in time, why would we expect it to work in an institutionally, historically or culturally completely different environment? Natural experiments are criticized in the same way, even when randomization is fully justified. Furthermore, due to ever-increasing pressure to publish, many young academics tend to overemphasize the importance of their findings or overestimate the strength of their causal inference. There are still a lot of caveats to keep in mind while reading even the best randomized trials or natural experiments. This doesn't mean I wish to undermine anyone's efforts, far from it. Every single one of these "new methods" papers is a huge improvement over the multivariate regressions of old, and a breath of fresh air after the mostly useless theoretical papers enslaved by their own rigid assumptions. Learning to think like a real scientist involves a steep learning curve. It will take time for all of us to design even better experiments and even better identification strategies to get us to the level of modern medicine or psychology. But we're getting there, that's for sure!
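
The external-validity concern can be illustrated with a hypothetical sketch: suppose a policy raises the outcome by 4 only for people with some characteristic (labelled "eligible" here, an assumption for illustration) and does nothing for everyone else. The same, perfectly randomized experiment then yields different, internally valid answers in two populations that differ only in how common that characteristic is.

```python
import random
import statistics


def rct_effect(share_eligible, n=4_000, seed=0):
    """Difference-in-means estimate from a hypothetical RCT in a population
    where a fraction share_eligible responds to the policy (effect 4)
    and everyone else does not respond at all."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        eligible = rng.random() < share_eligible
        outcome = rng.gauss(0, 2)
        if rng.random() < 0.5:            # random assignment
            treated.append(outcome + 4 * eligible)
        else:
            control.append(outcome)
    return statistics.mean(treated) - statistics.mean(control)


effect_a = rct_effect(share_eligible=0.8)   # population A: true average effect 3.2
effect_b = rct_effect(share_eligible=0.2)   # population B: true average effect 0.8
print(f"population A: {effect_a:.2f}, population B: {effect_b:.2f}")
```

Neither estimate is wrong; each is the average effect for the population studied. Carrying one over to the other requires knowing (or assuming) that the populations are similar in the ways that matter for the effect.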

P.S. For those who want more: coincidentally, the two keynotes at our conference, Angrist and Krueger, wrote a great book chapter back in 1999 on all the available empirical strategies in labour economics. They set the standards for the profession by emphasizing the importance of identification strategies for causal relationships. I encourage you to read their chapter. It's long, but it's very good. You may also find this handout from Esther Duflo particularly helpful. She teaches methods at Harvard and MIT, and she is one of the heroes of the causal inference revolution (here is another one of her handouts on how to do field experiments; see all of her work here). Finally, if you really want to dig deep into the subject, there is no better textbook on the market than Angrist and Pischke's Mostly Harmless Econometrics, except maybe their newer and less technical book, Mastering 'Metrics. If you are a layman, I would start with Mastering 'Metrics and then move on to Mostly Harmless. Two last recommendations: Thad Dunning's Natural Experiments in the Social Sciences, and Gerber and Green's Field Experiments. They are a bit more technical, but great for graduate students and beyond.

P.P.S. This might come as a complete surprise given the topic of this post, but I will be teaching causal inference to PhD students next semester at Oxford. Drop by if you're around.
